Understanding P-Values: A Guide for UK Students

If you've started a research project, especially one that involves data, you've probably come across a 'p-value'. At first it may look like just another number for your results section. In practice, however, it plays a far more important role: it helps you judge whether your results could simply be due to chance or reflect a real effect.

This guide is written for UK university students who want to understand p-values, how to interpret them, and how to avoid some common mistakes. Whether you're studying biology, psychology, economics, or any other subject that involves data analysis, understanding p-values is essential. If you're looking for academic writing help, especially with explaining statistical results clearly, this guide will take you through the basics.

What Is a P-Value?

Let's begin with the fundamentals. A p-value is a number that helps you decide whether your test's findings are statistically significant. Simply put, it is the probability of obtaining results at least as extreme as the ones you observed if there were, in fact, no real effect (that is, if the null hypothesis were true).

Suppose you carry out a study to see whether a particular type of tea improves sleep quality. One group drinks the tea, and their sleep is compared with that of another group that does not. You then calculate the p-value.

- A low p-value suggests that the difference in sleep between the two groups is unlikely to be due to chance alone.

- A high p-value suggests that the data provide little evidence that the tea had an effect; the difference could plausibly be due to chance.
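To make the definition concrete, here is a minimal Python sketch of a permutation test, one simple way to compute a p-value for a study like the tea example. The sleep scores are invented for illustration and the function name `permutation_p_value` is hypothetical. The idea is that if the tea had no effect, the "tea" and "no tea" labels would be arbitrary, so the code shuffles the labels many times and counts how often a difference at least as large as the observed one turns up by chance alone.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)

    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are arbitrary, so shuffle them
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1

    # Adding 1 to both counts keeps the estimate from ever being exactly 0
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical sleep-quality scores on a 0-10 scale (invented, not real data)
tea = [7.1, 6.8, 7.5, 8.0, 7.2, 6.9, 7.8, 7.4]
no_tea = [6.2, 6.5, 6.9, 6.1, 6.7, 6.4, 7.0, 6.3]

print(f"p is approximately {permutation_p_value(tea, no_tea):.4f}")
```

Because the invented groups barely overlap, the estimated p-value here is small. With real data you would more often use a standard test such as a t-test, but the logic of "how often would chance alone produce this?" is the same.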

Knowing the Boundaries: What Is "Statistically Significant"?

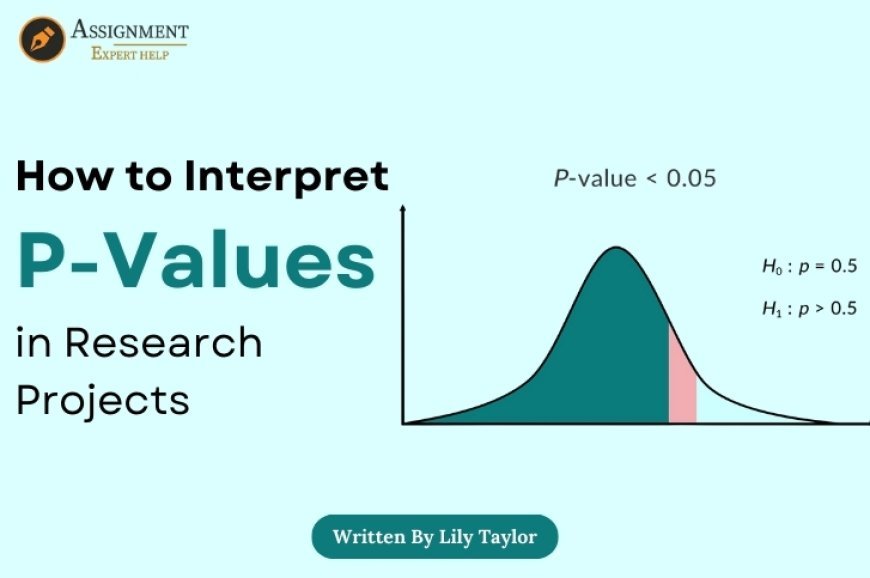

To decide whether a p-value is "small enough", most studies use a standard threshold, called the significance level (α). Typically, this is 0.05, or 5%.

What does this imply, then?

- P-value < 0.05:

You reject the null hypothesis (the assumption that there is no effect) and conclude that your results are statistically significant.

- P-value ≥ 0.05:

You fail to reject the null hypothesis. Your results are not statistically significant at this threshold.

It's important to remember that this cut-off is not fixed. In fields such as medicine, where a false positive has more serious consequences, researchers often use a stricter threshold, such as 0.01.
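The decision rule can be written as a small Python function. This is only a sketch: the name `interpret_p` is hypothetical, and it follows this guide's convention of treating p < α as significant. Passing a different `alpha` shows how the same result can be judged differently under a stricter threshold.

```python
def interpret_p(p_value: float, alpha: float = 0.05) -> str:
    """Apply the conventional decision rule: reject H0 when p < alpha."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("a p-value must lie between 0 and 1")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    if p_value < alpha:
        return "reject H0: statistically significant"
    return "fail to reject H0: not statistically significant"


# The same p-value can lead to different conclusions under different thresholds
print(interpret_p(0.03))              # reject H0: statistically significant
print(interpret_p(0.03, alpha=0.01))  # fail to reject H0: not statistically significant
```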

A Real-Life Analogy

A p-value can be compared to a building's smoke alarm.

- If the detector sounds when there is no fire, it has raised a false alarm. In statistics, this is a Type I error: rejecting a null hypothesis that is actually true.

- If the detector sounds because there really is a fire, it is doing its job.

The p-value helps you judge whether your smoke detector (your statistical test) may be raising a false alarm. For instance, a p-value of 0.03 means that, if there were truly no effect, results at least as extreme as yours would occur only about 3% of the time. Strictly speaking, it is not a 3% probability that your finding is a fluke, which is a common misreading.
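The analogy can be checked with a quick simulation. The Python sketch below runs many imaginary experiments in which there is no "fire": both groups are drawn from the same population, so the null hypothesis is true. Using α = 0.05, the alarm should go off in roughly 5% of them. For simplicity it assumes normally distributed data with a known standard deviation (a z-test), and the function names are hypothetical.

```python
import random
from statistics import NormalDist, fmean


def two_sample_z_p(a, b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known population SD."""
    se = sigma * (1 / len(a) + 1 / len(b)) ** 0.5
    z = (fmean(a) - fmean(b)) / se
    return 2 * NormalDist().cdf(-abs(z))


def false_alarm_rate(n_experiments=2000, n_per_group=30, alpha=0.05, seed=1):
    """Fraction of simulated 'no fire' experiments in which p < alpha."""
    rng = random.Random(seed)
    alarms = 0
    for _ in range(n_experiments):
        # Both groups come from the same population, so the null hypothesis is true
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if two_sample_z_p(a, b) < alpha:
            alarms += 1
    return alarms / n_experiments


print(f"False alarm rate: {false_alarm_rate():.3f}")  # should be close to 0.05
```

In other words, the threshold α sets how often you are willing to accept a false alarm when nothing is really going on.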

What a P-Value Does Not Tell You

This matters because p-values are often misinterpreted. Here is what a p-value does not tell you:

- It does not tell you how big the effect is.

A small p-value suggests that an effect is likely to be present, but it does not mean the effect is large.

- It does not prove your hypothesis is true.

It simply means that your results would be unlikely if there were no real effect.

- It is not the probability that the null hypothesis is true.

This is a common mistake. A p-value of 0.04 does not mean that the null hypothesis has a 4% chance of being true. It means that, if the null hypothesis were true, there would be a 4% chance of observing results as extreme as yours, or more extreme.
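In symbols, writing T for the test statistic, t_obs for the value calculated from your data and H_0 for the null hypothesis, the distinction is between the two quantities below. The one-sided form is shown; a two-sided test uses |T| ≥ |t_obs| instead.

```latex
p = P\left(T \ge t_{\mathrm{obs}} \mid H_0 \text{ is true}\right)
\quad \text{(not to be confused with)} \quad
P\left(H_0 \text{ is true} \mid \text{data}\right)
```

The p-value assumes the null hypothesis is true and asks how surprising your data would be; it cannot, on its own, tell you how likely the null hypothesis is.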

Common Mistakes UK Students Make When Interpreting P-Values

Let's review some of the common errors that students at UK universities make when conducting research:

- Treating 0.049 and 0.051 as Opposites

Although the two values are nearly identical, one is labelled "significant" and the other is not. This shows that the 0.05 threshold is a useful guideline rather than a hard-and-fast rule. Always consider context when interpreting results; don't rely solely on arbitrary cut-offs.

- Ignoring the Size of the Effect

Suppose your test gives a p-value of 0.01 but a very small effect size (the magnitude of the difference). Your result may be statistically significant yet of little practical importance. P-values should always be reported together with effect sizes.

- Reporting Only "Significant" Results

Running several tests until one gives a p-value below 0.05 is known as "p-hacking". It produces unreliable results and is poor scientific practice. Report everything you find honestly, not just the significant results.
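A short calculation shows why p-hacking misleads. If every null hypothesis is true and the tests are independent (an assumption; real tests run on the same data are often correlated), the chance that at least one of k tests gives p < α is 1 − (1 − α)^k. The Python sketch below computes this; the function name is hypothetical.

```python
def chance_of_false_positive(n_tests: int, alpha: float = 0.05) -> float:
    """Probability of at least one p < alpha across n independent tests when every null is true."""
    return 1 - (1 - alpha) ** n_tests


for k in (1, 5, 10, 20):
    print(f"{k:>2} tests -> {chance_of_false_positive(k):.3f}")
# Prints 0.050, 0.226, 0.401 and 0.642 respectively
```

With ten independent tests, there is roughly a 40% chance of at least one "significant" result even when nothing real is going on.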

When Is a High P-Value Useful?

Suppose you're investigating whether two teaching techniques are equally effective. A high p-value (such as 0.78), which may be the outcome you were hoping for, indicates that there is little evidence of a difference.

Therefore, a high p-value does not mean your study was a failure. It simply shows that you did not find compelling evidence against the null hypothesis. That can still be a useful finding.

The Role of Sample Size

Many students don't realise that sample size affects the p-value.

- With a small sample, even a large difference may not be statistically significant, because there may not be enough data to draw a firm conclusion.

- With a very large sample, even small, practically unimportant differences may be statistically significant.

Therefore, always consider whether your sample size is appropriate. Be cautious when interpreting p-values, because many undergraduate studies in the UK have limited samples owing to time and access constraints.
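The effect of sample size can be shown by holding the size of a difference fixed and changing only the number of participants. This Python sketch assumes two equal-sized groups, a known and equal standard deviation (so a simple z-test applies) and a difference of 0.2 standard deviations; the function name is hypothetical.

```python
from statistics import NormalDist


def p_for_mean_difference(diff_in_sd_units: float, n_per_group: int) -> float:
    """Two-sided z-test p-value for a fixed standardised difference between two equal-sized groups."""
    se = (2 / n_per_group) ** 0.5  # standard error of the difference, in SD units
    z = diff_in_sd_units / se
    return 2 * NormalDist().cdf(-abs(z))


# The same modest difference (0.2 standard deviations) at three sample sizes
for n in (10, 100, 1000):
    print(f"n = {n:>4} per group -> p = {p_for_mean_difference(0.2, n):.4f}")
```

The difference is identical in every case; only the amount of data changes. With 10 participants per group the p-value is large, while with 1,000 per group it falls far below 0.001, which is why a p-value should always be read alongside the sample size and an effect size.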

How to Include P-values in Your Report or Dissertation

UK universities usually provide explicit guidance on how to present statistical results. A typical format is:

- "The test scores of Group A and Group B differed significantly (t(28) = 2.34, p = .026)."

Key pointers:

- Put "p" in italics.

- If the p-value is very small, write "p < .001" rather than "p = .000".

- Always include the test statistic (such as t, F, or χ²) and its degrees of freedom.

- Follow the formatting guidelines set by your department, such as APA or Harvard.
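If you report many results, a small helper can keep the formatting consistent. The Python sketch below is hypothetical (the function names are invented) and follows the conventions in the example above: three decimal places with no leading zero, and "p < .001" for very small values. Conventions differ between departments, so check your own style guide; plain strings also cannot carry italics, so apply those in your document.

```python
def format_p(p: float) -> str:
    """Format a p-value with three decimals and no leading zero, or as 'p < .001'."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("a p-value must lie between 0 and 1")
    if p < 0.001:
        return "p < .001"
    return "p = " + f"{p:.3f}".lstrip("0")


def format_t_test(df: int, t: float, p: float) -> str:
    """Build a result string such as 't(28) = 2.34, p = .026'."""
    return f"t({df}) = {t:.2f}, {format_p(p)}"


print(format_t_test(28, 2.34, 0.026))  # t(28) = 2.34, p = .026
print(format_p(0.0004))                # p < .001
```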

Key Takeaways for Students in the United Kingdom

Here is a short checklist to remember:

- A p-value tells you how likely results at least as extreme as yours would be if there were no real effect.

- The traditional cut-off for statistical significance is 0.05.

- P-values don't measure effect size or prove your hypothesis.

- Remember to report confidence intervals and effect sizes.

- Take context and sample size into account when interpreting results.

- Avoid cherry-picking significant results or p-hacking.
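The advice to report effect sizes alongside p-values can be illustrated with Cohen's d, one common effect-size measure for a difference between two group means (it is not the only option, so check what your field expects). This Python sketch uses invented sleep-quality scores; by Cohen's widely cited rough benchmarks, values around 0.2, 0.5 and 0.8 are described as small, medium and large.

```python
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # Pooled variance weights each group's sample variance by its degrees of freedom
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / pooled_var ** 0.5


# Hypothetical sleep-quality scores (invented for illustration, not real data)
tea = [7.1, 6.8, 7.5, 8.0, 7.2, 6.9, 7.8, 7.4]
no_tea = [6.2, 6.5, 6.9, 6.1, 6.7, 6.4, 7.0, 6.3]
print(f"Cohen's d = {cohens_d(tea, no_tea):.2f}")
```

Each group needs at least two values for the sample variance to be defined.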

Concluding Remarks: Be Careful, Not Just Technical

P-values are a useful tool, but they are only one part of the analysis. Don't place all your trust in them. Combine them with effect sizes, confidence intervals and, above all, your understanding of the context.

In other words, a capable researcher does not simply state that the p-value is less than 0.05. They consider the practical implications of that result.

A thorough understanding of p-values will help you present your findings confidently and accurately in any research project, whether a dissertation or a lab report. If you're seeking assignment writing help, understanding how to interpret statistical results such as p-values is a key part of producing strong academic work. Finally, remember that achieving a "significant" result isn't your only goal: the real aim is to interpret the data in a way that contributes meaningfully to your field.
